Is Artificial Intelligence going to take over the world? What can A.I. really do? Is it a good resource for composing exciting new recipes or generating cat names or even creating modern art? Is it too smart or not smart enough, and what are the dangers?

These are all topics explored in one of this month’s featured books from The Next Big Idea Club (led and promoted by some of our favorite thinkers in the realm of social science, including Daniel Pink, Malcolm Gladwell, Susan Cain, and Adam Grant).

Author and research scientist Janelle Shane tactfully breaks down the wonders and mostly hilarious shortcomings of “Artificial Intelligence” in You Look Like A Thing and I Love You: How Artificial Intelligence Works and Why It’s Making the World a Weirder Place (Amazon). The applications it has for educators – well, there must be some, right?

If you are looking for a fun and easy (and enthralling and informative) holiday read that will sharpen your party and family dinner conversation, I highly suggest you read this book. You will be laughing out loud at her illustrations and at some of the downright silly things her AI bots have come up with in their awkward phase of learning.

Yes, Shane is herself a teacher of emerging intelligences, as she describes in this recent 10-minute TED Talk, where she relates an experience she had with a group of middle school “coders” who were helping her train an A.I. (aka, neural net, machine learning algorithm – more on that later).

Shane and her human assistants were “teaching” the A.I. to come up with new names for home decorating paint colors by providing lots of examples for the A.I. to learn from. (See some of the AI’s suggestions at left – you might imagine why several of these were a big hit with 7th graders.)

Shane’s book title comes from one of her bots that she trained to write pick-up lines. It keeps company with other gems like “You must be a tringle? Cause you’re the only thing here.” And “Hey, baby, you’re to be a key? Because I can bear your toot?” If you’re not blown away by those, you’ll love bot recipes for eggshell and mud sandwiches and whatever “Mestow Southweet With Minks and Stuff in Water pork, bbq” is.

Silliness aside, while reading You Look Like A Thing, I couldn’t help but draw parallels to our ABPC work and how kids (and teachers) learn best. The ways humans and AIs learn are not dissimilar: success can mostly be traced back to how well we’ve prepared them for the task and whether we are asking them the right (or wrong) questions.

So far, AI is not so smart

Hollywood and sci-fi movies must accept some of the blame for exaggerating the AI threat or making us overconfident about what AI can accomplish. Shane doubts that we will be surrounded by sentient robots (think C-3PO or WALL-E) anytime soon, given the ever-growing evidence that the human brain is complex far beyond our current ability to understand or describe it, much less duplicate it.

Today’s AI models lack general intelligence (a general “understanding” of the world), but they do have narrow, specialized intelligence. Unlike old-school computer programs, AI doesn’t run on step-by-step instructions. It “learns from examples.” But it depends on humans to supply those examples, and humans often overlook nuances that their organic brains simply take for granted.

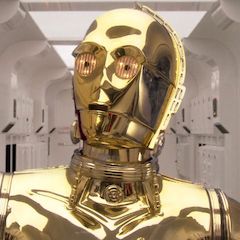

Shane points to a company that gave AI algorithms the task of searching among job applications for the best candidates. The AI chose to compare the resumes of current applicants to the applications of individuals who had been hired or rejected in the past. Seemed sensible. The AI found that candidates with the word “women” in their resumes (e.g., “Association of Women Engineers”) had seldom been hired, so it simply eliminated them from further consideration. Oops.

“If there is one thing we have learned…it’s that AI can’t do much without humans,” Shane writes. “Left to its own devices, at best it will flail ineffectually, and at worst it will solve the wrong problem entirely, which can have devastating consequences.”

Janelle Shane’s themes can be summed up in her Five Principles of AI Weirdness:

- The danger of AI is not that it’s too smart but that it’s not smart enough.

- AI has the approximate brain power of a worm.

- AI does not really understand the problem you want it to solve.

- But: AI will do exactly what you tell it to. Or at least try its best.

- And AI will take the path of least resistance.

What AI really is

A lot of what we may think is AI today is some mixture of rules-based programming, machine learning algorithms, and human input. To come up with WALL-E or C-3PO (or even Arnold Schwarzenegger’s Terminator) would require an artificial brain with tens of billions of neurons. By comparison, today’s typical AI has about the number of neurons found in a reasonably fat, juicy earthworm – about enough to win at chess but not enough to parse human behavior. If you find that online recommendations for books, movies, and consumer goods often seem strangely out of whack, now you know why. That’s artificial intelligence “figuring you out.” 🙂

So while it is all well and good to be cautiously polite to our AI and say “thank you” to Siri, Alexa, or Google Home after they successfully set that timer you asked for (looking at you, Dad), it is not likely they’ll “remember you’re one of the good ones” when we all live through our own version of The Terminator.

Fortunately, Skynet is not likely to take over soon. Unfortunately, as Shane notes, the real danger is AI knowing too little, not too much. In simulations, Shane says, AI has been known to select “kill the humans” as the simplest solution to some rather benign problems. It’s a rare occurrence, but a good example of the flaws in AI learning.

So, how do AIs problem-solve?

AIs, like some humans, take shortcuts when they are learning. They are not being mischievous or vindictive or trying to cheat. They are simply performing the task you asked them to perform. It’s not their fault you assumed they would come at the problem like a human would.

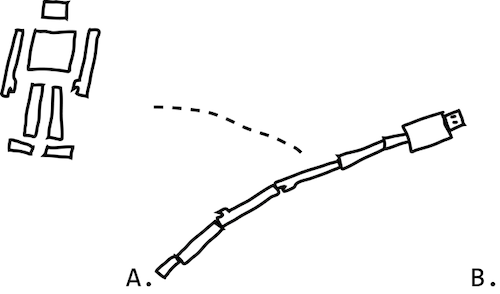

EXAMPLE: If you were to give a human the problem of getting a robot from point A to point B, you would expect them to build a traditional humanoid robot with arms and legs to walk from point A to point B, or maybe even build some sort of vehicle with wheels. Pretty simple, right?

Shane says if we give the same problem to an AI and don’t tell it specifically what to build, it will usually build a robot that assembles itself into a sufficiently tall tower and then falls over…from point A to point B. This effectively solves the problem you asked it to solve, but not with the results you might have expected.

There are countless other examples of this kind of “alternative problem solving” from AIs. When asked to identify pictures of sheep, after being trained on plenty of pictures of sheep, AIs started identifying sheep in any picture with grass or bushes in the background. Instead of focusing on the sheep, they noticed the similar backdrops and learned that association instead.

When asked to find and remove discrepancies in a list of numbers, an AI might delete the entire list, effectively getting rid of any mistakes. When asked to die as few times as possible in a video game, one AI paused the game indefinitely so that it would never die.

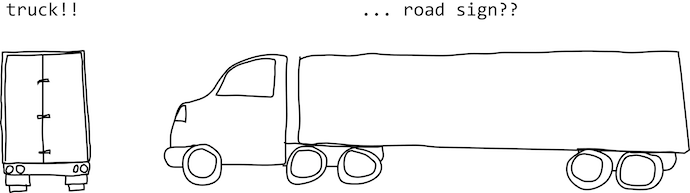

Outside of simulations, in extreme and rare circumstances, human shortsightedness in programming AI has had disastrous consequences. In one famous 2016 fatality, a self-driving car (intended to be used ONLY on highways) failed to stop for a freight truck crossing from a secondary road because it mistook the truck for an overhead interstate sign. It had never been shown the sides of trucks, only the fronts and backs.

What’s the take-away for educators?

Learning about AI teaches us more about ourselves and how we teach others. We assume learners will know things without being told or taught. Or we give them examples that are obvious to us but befuddling to them.

If they don’t know what you are asking, they will make their own assumptions from previous learning or get distracted. Asking the right questions is key. So are formative assessment and feedback. If we don’t pay attention, they may grow up to precipitate unfortunate events.

“AI is prone to solving the wrong problem, because it doesn’t have the context to understand what you’re trying to get it to do. That’s a human-level task.”

Perhaps the most shocking thing about AIs? They can pick up our bias and prejudices from datasets, even when we don’t want them to. Remember the story I mentioned about the AI sorting job applications and deleting the women? It’s part of a pervasive issue, Shane says.

AIs have also been found to penalize applicants with ethnic names and people who lived in certain ZIP codes. One even decided that the things that made a candidate most qualified to work for a particular company were being named Jared and having the word “lacrosse” on their resume. AIs learn from the information you give them. Even if you hide race and gender from the applications, the AI algorithm may find a way (ZIP codes, names, word choice) to discriminate against humans, even though it has no concept of what a human is or what discrimination is. It has learned the patterns from the humans. There’s an educational lesson there, for sure, but that’s a whole other blog post entirely.

If you want to learn more, pick up Janelle Shane’s book. It’s fascinating. My big take-away? We want to give both kids and AIs the tools to create a more harmonious future, but we need to know what we want them to do and make sure THAT is what we’re teaching them.

As AI is showing us, these innocent intelligences that surround us aren’t in stasis – they are constantly creating patterns of their own, often reflecting our unspoken values. What do we really want to pass on to them and what do we really want them to do with that information?

Emily Strickland is Program Coordinator for the Alabama Best Practices Center. A native of Montgomery, she attended Saint James School from kindergarten through graduation and still talks to her best friends from primary school every day. In 2012, she earned a bachelor’s degree in English from Auburn University and worked for several nonprofits before joining ABPC in 2017. Reach her via email at [email protected].